The Truth about Brutal Honesty

Brutal honesty has come up several times recently in the context of the NYT “exposé” on the Amazon work environment. I don’t have any firsthand knowledge of working at Amazon, and I agree that the NYT piece was strangely vindictive. Highly mobile professionals with 6-figure salaries don’t need our protection from Bezos or anyone else. They can vote with their feet.

But I also think that the concept of brutal honesty is idealized as a way to create an efficient and transparent work environment where information flows without impediment. In my experience of working at two large corporations, the reality was much more nuanced than that.

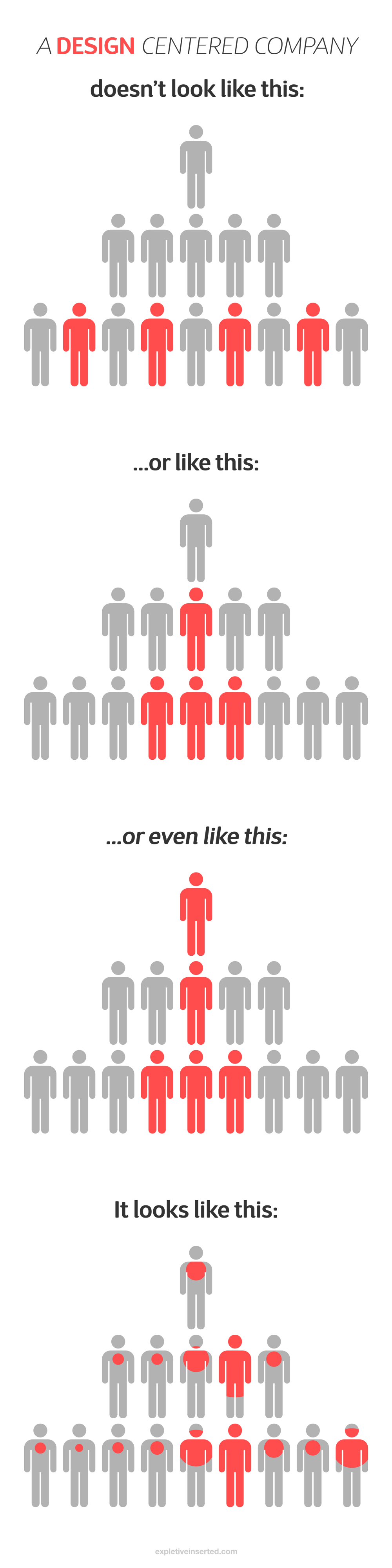

The ability to be honest is asymmetrical. People with power (positional, political, or otherwise) can afford to be honest. Most often, people without power cannot. Or at the very least they have to be much more careful about how they express themselves. As a result, organizations that are “brutally honest” often reinforce the power structure, rather than keep it in check. Instead of facilitating a better flow of information, they create different kinds of blockages. Privilege is amplified, not neutralized.

“Brutal” is sometimes literal. Amazon is often compared to an earlier Microsoft. The aspect of early Microsoft culture being referred to wasn’t defined so much by the “honest” way people expressed themselves, as it was by the “brutal” way they did so. Shouting, even screaming, at subordinates in product reviews was not unusual. This behavior didn’t have much to do with honesty though. It was management by intimidation.

“Honesty” is sometimes an excuse for ignorance. I have worked with talented people who constantly complained about getting into trouble because they were being too honest — people couldn’t deal with the truth. Sometimes this was true, but most often they were blind to some dimensions of the problem. Like the developer who sees only the engineering perspective and calls the marketing team bozos. Most often the marketing team are just smart people working within their own constraints. Constraints the engineer doesn’t understand.

“Honesty” is sometimes an excuse for bad behavior. If your intent is to build your reputation by tearing down a coworker in front of their peers and manager, then some brutal honesty in a group setting is effective. But if your intent is to make a better product and build good working relationships, then a non-confrontational private meeting is effective. This is orthogonal to honesty.

Honesty is temporal. Speed is often confused with quality when it comes to thinking in a corporate setting. Some people have the ability to very quickly package a thought and sell it verbally. By speaking out first they dominate the flow of interaction in group settings, and in their desire to do so, they often package up very early thinking. Somewhere else in the meeting someone is being more thoughtful and going deeper, but by the time their thought is packaged and ready, it is too late to jump in. The truth is that on any topic our thinking will evolve over a scale of minutes, hours, days and even months. We can choose the point at which we express our current “honest” point of view.

None of this is to say that honesty isn’t important. As with the often-repeated story of Steve Jobs chiding Jony Ive for not being brutally honest with a subordinate, being dishonest is seldom constructive. But there is a lot of nuance in the who, the to whom, the how and the when of expressing an honest opinion.

Honesty is an input, not an outcome. Outcomes are user experiences, revenues, and the happiness and satisfaction people derive from making those things.

Posted: September 8th, 2015 under Motherhood & Apple Pie.
