Native Versus Web: A Moment In Time

Whenever I see punditry about web versus app, or worse, web versus mobile, I see a graph in my mind’s eye. It is inspired by disruption theory, but I’m not a deep student of Christensen’s work so please don’t read his implicit support into anything I’m writing here.

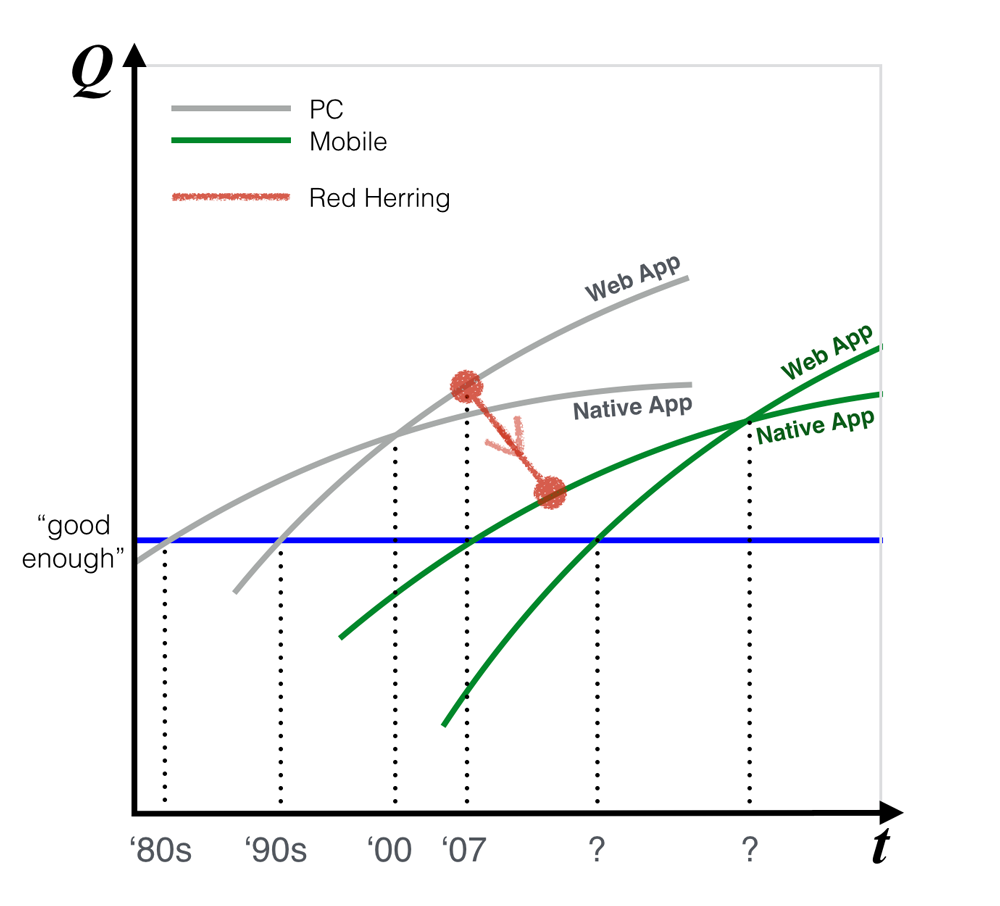

Skipping to the punchline, here’s that graph.

For those who don’t find it self-explanatory, here’s the TL;DR:

Quality of UX Over Time

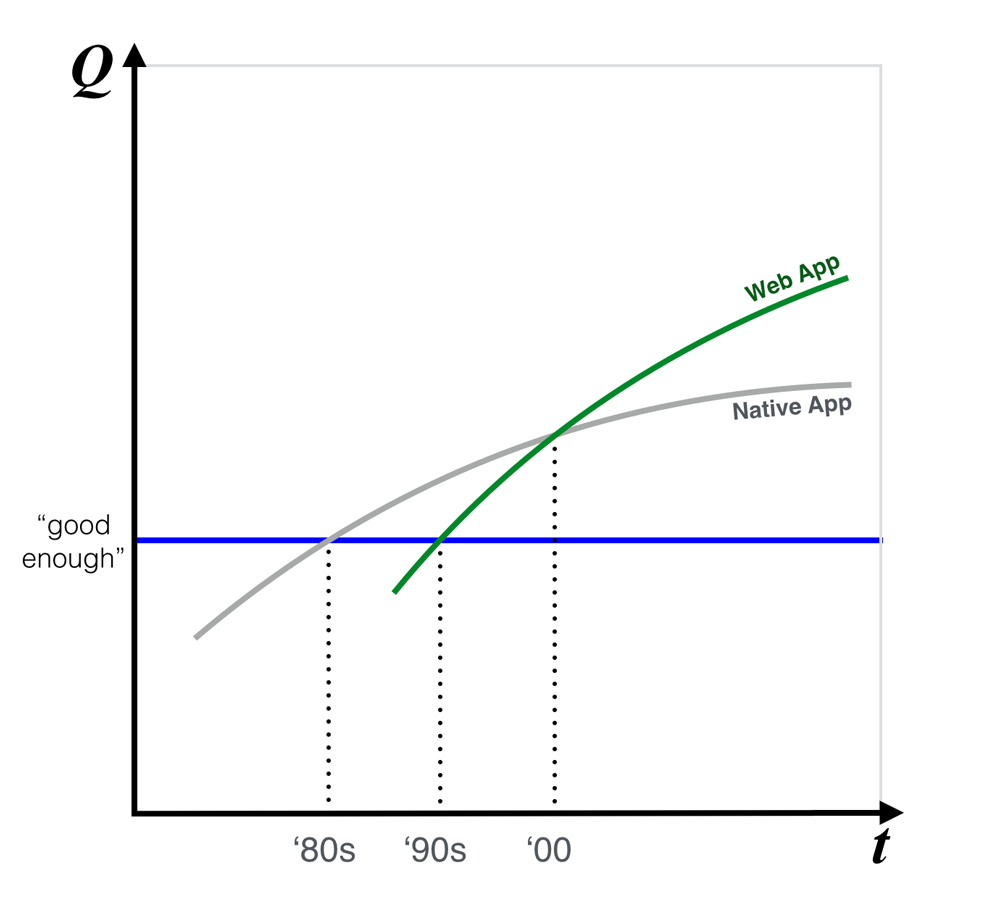

It starts with a simplistic graph of quality over time for app user experience on computers. Sometime in the early ’80s apps went mainstream in the sense that many consumers were using and purchasing them.

The exact date really isn’t important. The point is that apps exceeded a certain threshold in quality when they became “good enough” for mass adoption. An exact definition of “quality” also isn’t important, but it would encompass all aspects of the user experience in a very broad sense. That is, not only ease of use in the user interface, but also things like installation and interoperation with hardware and other software.

To further state the obvious:

- This curve is conceptual, not based on accurate measurements of quality.

- This is an aggregate curve, but curves would be different for different classes of application.

- “good enough” might not be constant, and is probably rising over time along with our expectations.

The Internet Arrives

At some point in the mid to late ’90s the web was good enough for a lot of things and started inflating the first tech bubble as people switched to lower cost web apps. By the mid-2000s, the web had all but destroyed the economy for consumer native apps on Windows. With the exceptions of hardcore gaming, productivity software and professional apps, most new experiences were being delivered via web apps. Even in those areas, web-based alternatives were starting to challenge the entrenched native app incumbents (e.g. Google Docs versus Microsoft Office).

iPhone and a New Set of Curves

In 2007 Steve Jobs unveiled the iPhone and opened the door to mainstream mobile computing. Initially third-party apps could only be web based, but soon there was an SDK and native apps were possible. What followed was a remarkable explosion of innovation and consumption as the iPhone, and then Android, liberated our connected computing experiences from desktop and laptop PCs.

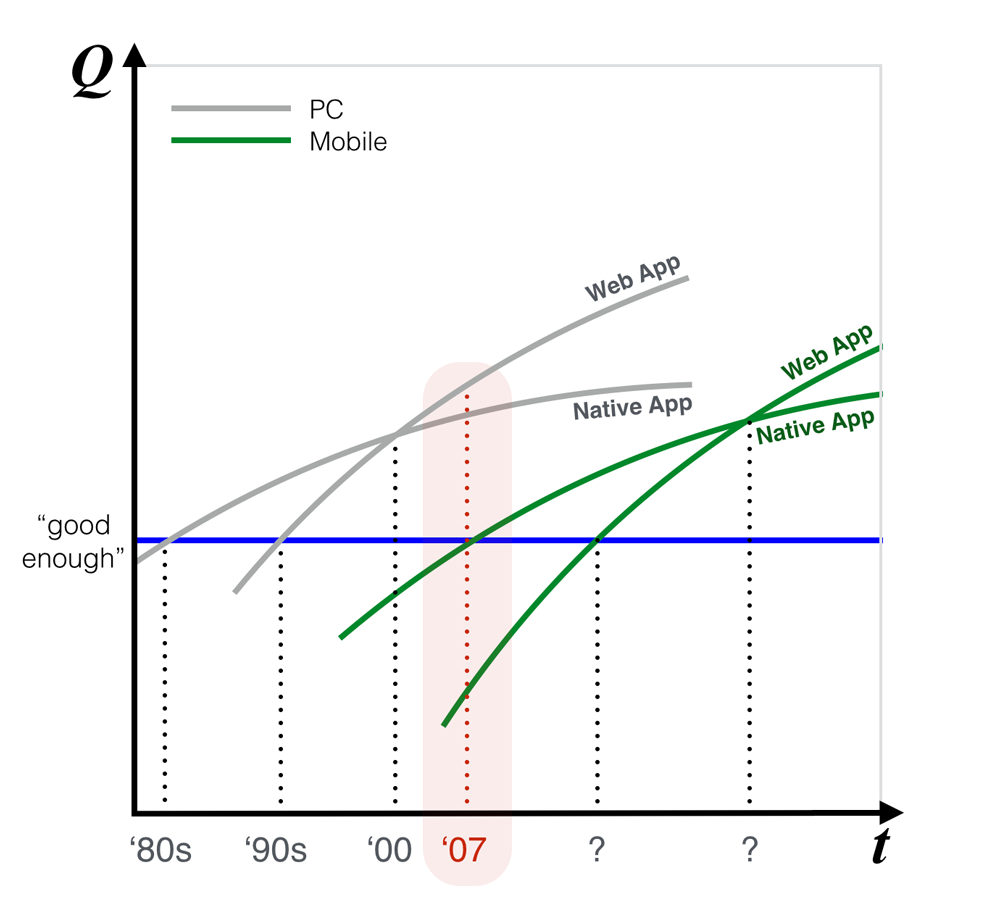

This is where things got interesting. The fundamentally different user experience offered by the opportunities (ubiquity, location) and limitations (size, network quality) of mobile devices meant that we had jumped to a new set of quality curves. And on these new curves we had regressed to a situation where the web wasn’t good enough and native apps were once again dominant.

Today native apps offer a far superior experience in most cases. There are some exceptions, like news, where the advantages of surfing from web page to web page reading hyperlinked articles outweigh the performance benefits of installing all the apps for your favorite news sites. But for the most part the native apps are better. Will this be true forever?

I think that’s a risky bet to make. It’s not a question of if, but rather when web apps will be good enough for most purposes on mobile devices. The words “good enough” are important here. Although my graph has web apps overtaking native apps at some point, maybe they don’t need to. It is possible that good enough, combined with cost savings driven by developing apps without platform-imposed restrictions and without revenue share arrangements (e.g. Apple’s 30% share of developer revenues), will be sufficient to send the mainstream back to web apps.

The red arrow I have added in this final graph represents the reason I think so many observers draw the wrong conclusions. Web apps went from unquestioned supremacy on PCs to second-rate experiences on mobile devices. At the same time, good native apps on mobile devices catalyzed the explosion of the smartphone market. If you only look at a small piece of the elephant you can misinterpret what you see, and in this case people are misinterpreting this moment-in-time shift from web to mobile, or from web pages to apps, as a unidirectional trend.

Web and native are just two different ways of building and distributing apps. They both have advantages and disadvantages. Right now, in the relatively early stages of the phone as a computing device, the web isn’t a good enough platform to deliver most mobile experiences. Web apps on phones are jerky and slow and encumbered by browser chrome that was inherited from the desktop. They don’t have good access to local hardware resources. But the trajectory is clear: things are improving rapidly in all these areas.

The Web is Independence

All of the above is predicated on the assumption that a distinction between web and native apps will still make sense in the future. Perhaps the future-proof way to look at this is to view “native” as a proxy for experiences that are delivered via a platform owner acting as gatekeeper, and the “web” as a proxy for experiences that are delivered to consumers across platforms, with seamless interconnectivity, and unencumbered by the restrictions put in place by platform owners.

Put differently, the web represents independence from platform owners. It offers incredible freedom to build what you want to build, and to ship when you are ready to ship, without any gatekeepers. While I love my native apps today, I believe in the long-term potential of this freedom. Other problems that native app stores solve today (and there are many, like discoverability, security and peace of mind) will be solved on the web in ways that won’t require a platform overlord.

—

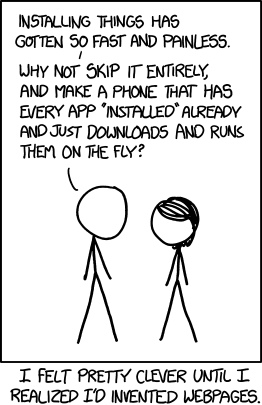

By @xkcdComic, via @duppy:

Posted: July 10th, 2014 under Mobile, The Wild Web.